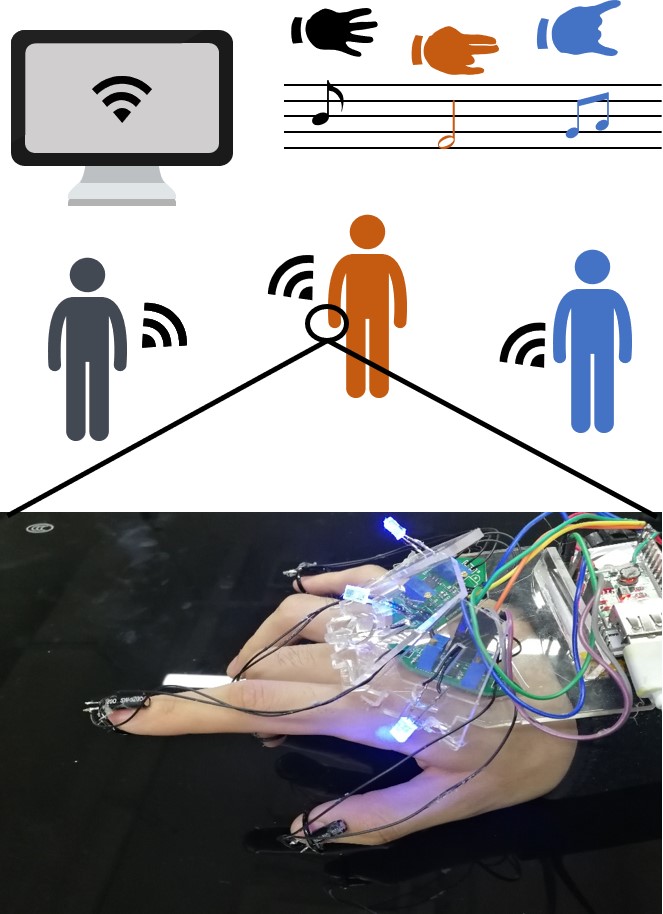

Geek!

President of PKU Makerspace (2018 - 2020)

I enjoy turning my crazy ideas into reality! From 2018 to 2020, I was the president of 'PKU Makerspace', an organization where 'makers' and 'geeks' create in the GeekLab of Peking University. I often build hardware and software systems from the ground up, such as robots, wearable devices, IoT devices, and software applications. Fortunately, most of my work has been invited for exhibition by well-known technology companies or has received awards in competitions.