RESEARCH

3D Reconstruction and Perception

{:.center}

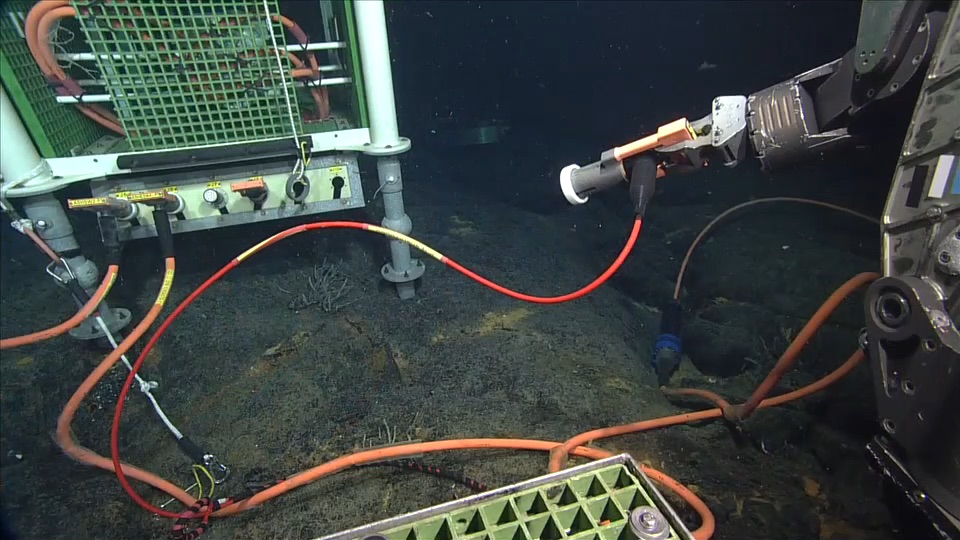

Click to watch the video, though it’s a big’n (Video from ROPOS working on behalf of the NSF OOI)


Darnit, I can’t watch that video without thinking how crazy it is that it’s all done under manual control. From just a few video feeds, the pilots have to integrate a huge amount of information: the physical structure of the scene, the positions of the arms, and the pose of the ROV itself.

Someday, like it or not, some of this will be done automatically. Properly designed, an automated system will be faster and safer (for the equipment), and won’t require multiple full-rate video feeds running up to a ship on the surface. Of course, to do that, it needs to understand the environment around it. The ocean is a completely unstructured environment: you can’t even rely on gravity to keep things in place when you set them down, and it’s full of animals with minds of their own!

I believe sensor fusion and SLAM-like techniques will be the first essential building blocks for this world understanding. You need to know where things are, and where you are relative to them, before you can safely avoid or manipulate them.
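As a toy illustration of what sensor fusion buys you, here is a one-dimensional Kalman filter that blends a dead-reckoned motion estimate with a noisy position fix. It’s a minimal sketch under assumptions I’m making up for illustration (a single position axis, Gaussian noise, made-up variances, and hypothetical helpers `predict` and `update`); it isn’t the navigation stack of any real vehicle.

```python
from dataclasses import dataclass


@dataclass
class Gaussian1D:
    mean: float  # estimated position along one axis (m)
    var: float   # variance of that estimate (m^2)


def predict(belief: Gaussian1D, motion: float, motion_var: float) -> Gaussian1D:
    """Shift the belief by a dead-reckoned motion; uncertainty grows."""
    return Gaussian1D(belief.mean + motion, belief.var + motion_var)


def update(belief: Gaussian1D, z: float, meas_var: float) -> Gaussian1D:
    """Fuse a noisy position measurement z; uncertainty shrinks."""
    gain = belief.var / (belief.var + meas_var)  # Kalman gain in [0, 1]
    return Gaussian1D(belief.mean + gain * (z - belief.mean), (1.0 - gain) * belief.var)


if __name__ == "__main__":
    # Hypothetical numbers: start at 0 m (var 1), move ~1 m (var 0.5),
    # then observe a position fix of 1.2 m (var 0.5).
    belief = predict(Gaussian1D(0.0, 1.0), motion=1.0, motion_var=0.5)
    belief = update(belief, z=1.2, meas_var=0.5)
    print(belief)  # mean ~1.15, var 0.375
```

The fused variance (0.375) is smaller than both the prediction’s (1.5) and the measurement’s (0.5), which is the whole point of fusing sensors. EKF-style SLAM extends the same predict/update pattern to full 6-DOF vehicle poses plus the positions of landmarks in the map.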

In this vein, I’m interested in all sorts of robotic vision, but particularly vision for AUVs and ROVs that can be used to reconstruct underwater environments in 3D.

- MaSCOT (my underwater stereo camera)

- Underwater video, stereo, multi-modal (sonar+video)

- Camera calibration from video

- Fiducial markers for localization, calibration, communications (?)
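Since MaSCOT is a stereo camera, the core geometry behind stereo reconstruction is worth writing down. For a rectified pinhole stereo pair with focal length f (pixels) and baseline B (meters), depth follows from disparity d as Z = f * B / d, and a first-order estimate of the depth error is dZ ≈ Z^2 * dd / (f * B). The sketch below assumes a rectified pair and ignores refraction at the housing port, which underwater makes the plain pinhole model only approximate; the focal length, baseline, and disparity are hypothetical numbers, not MaSCOT’s actual parameters.

```python
def stereo_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth (m) of a point in a rectified pinhole stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_px: float, baseline_m: float,
                disparity_err_px: float) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    # Hypothetical rig: 1400 px focal length, 12 cm baseline.
    z = stereo_depth(disparity_px=56.0, focal_px=1400.0, baseline_m=0.12)
    print(z)                                  # ~3.0 m
    print(depth_error(z, 1400.0, 0.12, 0.5))  # ~0.027 m for half a pixel of disparity error
```

Because the error grows with the square of the range, small calibration or matching errors turn into large depth errors for distant objects, which is part of why careful calibration matters so much for underwater stereo.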

I’m also interested in a host of more general SLAM-related problems, many of which are also robotics and autonomy problems.

- Life-long SLAM: Allowing SLAM maps to adapt to changing scenes, and changing scene appearances (e.g. moving lights, changing seasons)

This ties into my interest in video analytics as well, since I think object identification and semantic labelling are critical to going from geometry to true scene understanding.