Lessons Learned from MAVRIC’s Brain: An Anticipatory Artificial Agent and Proto-consciousness

George Mobus

Dept. of Computer Science, Western Washington University, Bellingham, WA 98225. email:mobus@cs.wwu.edu, http://merlin.cs.wwu.edu/faculty/mobus

Abstract. MAVRIC II is a mobile, autonomous robot whose brain is composed almost entirely of artificial adaptrode-based neurons. The architecture of this brain is based on the Extended Braitenberg Architecture (EBA) and includes the anticipatory computing capabilities of the adaptrode synapse. We are still in the process of collecting hard data on the behavioral traits of MAVRIC in the generalized foraging search task. But even now sufficient qualitative aspects of MAVRIC’s behavior have been garnered from foraging experiments to lend strong support to the theory that MAVRIC is a highly adaptive, life-like agent. The development of the current MAVRIC brain has led to some important insights into the nature of intelligent control. Although based on the nervous system of a simple invertebrate creature, this brain and its interactions with a realistic environment have nevertheless led to some important qualitative principles of design. In this paper, we elucidate some of these principles and, using this basis along with the work of Antonio Damasio, we develop concepts for the next generation (NG) brain. While it is a giant leap from the brain of a simple invertebrate to that of a human (as described by Damasio), the NG brain includes provisions for a proto-self reflecting the fundamental biological aspects of brain architecture as described by Damasio. We have reasons to believe that this proto-self is necessary for more advanced forms of intelligence. As with the success of the current brain, the NG brain will depend, ultimately, on the adaptive capacity (in a nonstationary world) of anticipatory processing elements.

1.0 INTRODUCTION

One of the strongest criticisms of the Braitenberg Architecture as a basis for designing intelligent robots has been the difficulty in identifying structures in the design phase that lead to specific behaviors. This is largely due to the seemingly ad hoc way in which BA “brains” are developed (c.f. Arkin, 1998, p. 74 for discussion of experimentally-based design). On the other hand, a great deal can be learned about the interplay of structure and behavior from the design exercise itself. In this paper, we will discuss some insights gained from the development of an Extended Braitenberg Architecture (EBA) brain for a mobile, autonomous robot. These insights are phrased in the form of “lessons” but should be viewed more as suggestions, hints or pointers to principles of design that may lead to more advanced forms of intelligence in machines.

In the final section of this paper we attempt to combine these lessons with neural models of the brain, including especially the role of neural maps, particularly body state maps (Damasio, 1994; Damasio, 1999), and with the notion of conceptual spaces (Gärdenfors, 2000). We provide the outline of an architecture of a more advanced brain-like controller for a future version of an animat. We suggest an argument for the conjecture that further advances in animat design must incorporate the notion of a proto-self and emotions as described by Damasio (1999).

2.0 MAVRIC’S BRAIN AND BEHAVIOR

MAVRIC, which stands for Mobile, Autonomous Vehicle for Research in Intelligent Control, is a mobile robot platform used to test the role of learning in foraging search by an autonomous agent (Mobus, 2000). The robot is an implementation of Valentino Braitenberg's notion of a synthetic creature that is able to learn (Braitenberg, 1984; see also Hogg, Martin, & Resnick, 1991). The architecture for the MAVRIC control software is an Extended Braitenberg Architecture (Pfeifer & Scheier, 1999, pp. 350-354). This architecture comprises a set of concurrent processes, which collectively produce behavior based on current sensory input plus memory traces (in a biologically-realistic neural network) from prior experiences (Mobus, 1999, 2000).

MAVRIC has four light detectors arrayed so as to provide directional information from bright light sources. We use battery powered, radio controlled light towers as sources of stimulation. The robot also has a microphone with four narrow band pass filters used to simulate the sense of smell. Each of the four separate tones represents a different odor and the volume of the tone represents the concentration of the odor in some gradient. Battery powered, radio controlled tone generators (sound towers) provide the source of stimulus. These towers can be placed arbitrarily in a large gymnasium. Figure 1 shows the MAVRIC robot and two of the stimulus towers.

Figure 1. The MAVRIC robot and two of the stimulus towers, one for light (left) and one for sound.

The robot base is an off-the-shelf Pioneer I robot from ActivMedia (unfortunately a discontinued line!) that we have modified rather extensively. We do use the front sonar array but only to simulate proximity detection like antennae of a snail (MAVRIC is meant to emulate the intelligence of a moronic snail!).

MAVRIC's task is to forage for resource objects. It learns that some combination of tones and light represents a resource. The towers are set in small clusters in a very large space such that the resource is sparsely and stochastically distributed. One of the four tones has been pre-designated as representing 'food'. The robot is ‘hardwired’ to recognize and move toward any tower emitting that tone. MAVRIC learns that a combination of another, neutral tone and light is associated with the food tone. Thereafter, MAVRIC uses the light and neutral tone cues to find the food, even when no food tone is emitted (Mobus, 2000).

Another tone has been pre-designated as representing ‘poison’. Thus MAVRIC must also learn to identify threats and avoid these. MAVRIC's world is thus composed of vast open space, cue signals (that it must learn to recognize) and both resources and threats. Its objective is to find a sufficient number of resources (under nonstationary distribution conditions) to maintain its energy level over time. Every time it encounters food it gets to feed and obtains a variable amount of energy. If it encounters poison it feels pain (causing an escape reaction) and suffers damage that leads to a reduction in energy. Also, just the act of moving in the world reduces its energy level, so it must find adequate food supplies or eventually it dies (energy == 0).

The operation of the towers is under computer control so that we can simulate an essentially infinite world for MAVRIC to search in. When it comes near the edge of a 20m X 20m area, the robot pauses while we 'scroll' the world past the robot (move towers to construct a new region in the arena and move the robot to the contralateral position on the floor). When it wakes up again, it proceeds into the new region of its world, even though the floor space is the same. From MAVRIC's point of view it must search over an infinite plain.

We are investigating the learning efficacy of a unique learning mechanism called the adaptrode, used as dynamic synapses in artificial neurons, which encodes causal correlations between any number of 'neutral' signals (stimuli) and signals that matter (physiologically) such as food odor or poison (Mobus, 1999, 2000). The neurons in the EBA network provide convergence zones where disparate signals can be correlated with appropriate temporal offsets. Our hypothesis is that causal correlation traces, over multiple time scales, are the basis of classical and operant conditioning and are fundamental to all other higher-order learning phenomena. In the current line of research we are interested in how this kind of learning increases the capacity of an agent to survive in a potentially hostile, realistic world. The latter aspect is covered by the notion of nonstationarity in environmental contingencies, an aspect that has received scant attention in agent research until now. We have speculated that the multi-time scale learning attributes of the adaptrode mitigate the confounding nature of non-homogeneous nonstationarity in associative relations in environmental objects (Mobus, 2000). MAVRIC will have to deal with changes in distribution density, and even in what signals portend (are cues to) food or poison, as these relations will be changed in a nonstationary manner over the course of MAVRIC's life. One of the more interesting things to look at is how well and how quickly MAVRIC adapts to abrupt changes in relations.

In the first set of experiments performed we were interested in emulating the search pattern used by many kinds of hunting animals as they forage for food. It is known from various ethological studies that animals do not use systematic search strategies when hunting in unfamiliar territory. Nor do they employ Brownian search (pure random motion, as is used in many robot foraging studies). Rather they use a form of quasi-random motion (Mobus, 1999), which ensures that they will cover a larger area of the territory and not get caught in a local cycle. This form of search may be of general interest in computational processes such as data mining in huge, dynamic databases. We employ a simulated central pattern generator (CPG) network (as in the above reference) to generate a 1/f noise-like oscillation. This signal, when applied to the motor outputs of the robot, produces a 'drunken sailor' walk, which provides considerable novelty but is not Brownian (Mobus, 1999). A report on this movement behavior is forthcoming.
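The contrast between a Brownian walk and a walk steered by a 1/f-like signal can be sketched in a few lines. The example below is not the CPG circuit used in MAVRIC; it swaps in the Voss-McCartney approach, which approximates 1/f noise by summing random sources that are refreshed at different rates. The function names (`pink_noise`, `wander`) and all parameter values are assumptions made for illustration.

```python
import math
import random


def pink_noise(n_steps, n_sources=5, seed=0):
    """Approximate 1/f noise: source k is redrawn every 2**k steps."""
    rng = random.Random(seed)
    sources = [rng.uniform(-1.0, 1.0) for _ in range(n_sources)]
    out = []
    for t in range(n_steps):
        for k in range(n_sources):
            if t % (2 ** k) == 0:
                sources[k] = rng.uniform(-1.0, 1.0)
        # Averaging keeps the signal within [-1, 1].
        out.append(sum(sources) / n_sources)
    return out


def wander(n_steps, speed=0.1, turn_gain=0.5, seed=0):
    """Integrate a path whose turning rate follows the noise signal."""
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for turn in pink_noise(n_steps, seed=seed):
        heading += turn_gain * turn          # slow sources bias turns for many steps
        x += speed * math.cos(heading)
        y += speed * math.sin(heading)
        path.append((x, y))
    return path
```

Because the slowly refreshed sources persist over many steps, the heading drifts in one direction for a while before reversing, which is one plausible way to obtain wide coverage without settling into a local cycle.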

Figure 2 is a schematic representation of the general architecture of MAVRIC’s brain, embedded within the software architecture (Saphira) of the ActivMedia robot.

Figure 2. Overall software architecture of MAVRIC’s brain. The architecture is embedded within the ActivMedia Pioneer’s low-level (Saphira) interface with the robot hardware. Each rectangle with the word ‘task’ is a concurrent task running under Saphira. Those on the left convert raw sensory data into a form used by the neural network. Those on the right convert the NN output to appropriate motor commands. The two tasks at the bottom (Disruption and Digestion), and the Depletion task (right side) are internal ‘physiological’ tasks representing body processes outside of the brain. The large rectangle in the center is the neural network (see Fig. 3 below).

MAVRIC’s internal operations include ‘body’ processes such as digestion (the conversion of food value into energy value), depletion (the use of energy from basal metabolism), and disruption (the damage to tissue due to encounters with threats). These processes operate over longer time scales than the time base used for processing neural signals. Signals from the processes interface with the neural network via evaluative feedback to associative neurons (Figure 3).
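As a rough illustration of how the three body processes might interact, the sketch below keeps a food store, an energy level and a damage level and updates them once per (slow) body time step. The class name `Body`, the rate constants, and the assumption that damage drains energy as it is repaired are all hypothetical; the paper states only that digestion converts food value to energy, that metabolism and movement deplete energy, and that damage reduces energy.

```python
from dataclasses import dataclass


@dataclass
class Body:
    food: float = 0.0     # ingested but undigested food value
    energy: float = 1.0   # usable energy; the agent dies at 0
    damage: float = 0.0   # unrepaired tissue damage

    def eat(self, amount):
        self.food += amount

    def hurt(self, amount):
        self.damage += amount

    def step(self, moving, digest_rate=0.05, basal_cost=0.001,
             move_cost=0.004, repair_cost=0.02):
        # Digestion: convert a fraction of stored food into energy.
        digested = digest_rate * self.food
        self.food -= digested
        self.energy += digested
        # Depletion: basal metabolism plus the cost of movement.
        self.energy -= basal_cost + (move_cost if moving else 0.0)
        # Disruption (assumed form): repairing damage costs energy.
        repaired = min(self.damage, repair_cost)
        self.damage -= repaired
        self.energy -= repaired
        self.energy = max(self.energy, 0.0)
        return self.energy > 0.0   # False once the agent has "died"
```

Calling `step` at a much lower rate than the neural update loop would reflect the longer time scales on which these processes operate.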

Figure 3. Neural network details, showing individual neurons in relation to other tasks. Each neuron is labeled and locally represents a specific meaning. Most of the neurons in this diagram are simple threshold units (but use dynamic synapses as opposed to fixed weights). Only two neurons actually contain plastic connections, Seek and Avoid. These neurons are the convergence points (Damasio, 1994) for associating perceptual signals (from neurons to the left in the diagram) with behavior (neurons to the right). Rectangles on the left of the diagram are sensory inputs. Larger rectangles above, below and to the right of the neurons are the body processes.

3.0 LESSONS

The following are some of the more important “lessons” that have been “learned” in the process of developing MAVRIC’s brain. These points are qualitative in nature and should be viewed more as hypotheses for principles that may be verified in future implementations of robot brains. The last section of this paper describes the strategies that we plan to use, based on these lessons, to design and implement a next generation (NG) brain. One of the main lessons learned has been that, given a strong ethological model for behavior (c.f. Lesson 3 below), it is essential to incorporate much more biologically realistic neural structure in the design of the brain (c.f. Lesson 4). We attempt to incorporate some of the structures described in (Damasio, 1999) in our thinking about the NG brain.

3.1 Lesson 1 – Don’t Discount Local Representation

Each neuron in figure 3 is labeled as representing a specific thing, such as Light Ahead or Go Straight. Each neuron, then, represents a distinctly different concept. This form of neural architecture is called “local representation” as opposed to “distributed representation” (Hinton, et al., 1998). The vast majority of classical connectionist approaches involve distributed representation, in which the representation of micro-concepts (including sub-classifications) is distributed in the weight matrix of the network. For all its virtues in terms of storage efficiency, generalization and robustness (ibid), there is a long-standing debate regarding the fundamental incompatibility of distributed representation with symbolic AI or good old-fashioned AI (GOFAI) (Minsky, 1990). The basic problem is that there is no single point that can be tagged with a meaningful label, thus making it impossible to reconcile the two forms of representation. Neural processing is ideally suited to robotic, or any analogical, interactions with a world of real physical forces. But classical neural networks based on distributed representation cannot easily be interfaced with symbolic systems.

Local representation does not suffer this problem. In local representation each neuron, or cluster of neurons, takes its meaning from its role in the network. Its activation level represents the degree to which its represented meaning is being asserted in a logic-like sense (even if fuzzy). In MAVRIC, a purely behavior-based robot, there is no actual interface with a symbolic system as such. However, it is clear that the structure of MAVRIC’s brain suggests rule-like performance. For example, a rule of the form:

IF LightAhead AND Seek THEN GoStraight; SlowDown,

is easily derived from the network.
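A minimal sketch of how such a rule could be read off graded activations is given below. It assumes activations lie in [0, 1] and takes the fuzzy AND to be the minimum of its inputs; both choices, and the function names, are illustrative assumptions rather than a description of MAVRIC's network.

```python
def fuzzy_and(*activations):
    """Fuzzy conjunction taken as the minimum activation (an assumption)."""
    return min(activations)


def rule_go_straight(light_ahead, seek):
    """IF LightAhead AND Seek THEN GoStraight; SlowDown."""
    strength = fuzzy_and(light_ahead, seek)
    # Both consequents are asserted to the degree the antecedent holds.
    return {"GoStraight": strength, "SlowDown": strength}
```

For instance, `rule_go_straight(0.9, 0.4)` asserts both consequents with strength 0.4, the weaker of the two antecedent activations.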

Another consideration that favors local over distributed representation concerns the loading problem (Judd, 1998). Finding a set of weights that meets the convergence criteria for a given pattern set is, in general, intractable. Thus training a classical neural network on a given problem domain is NP-hard. Classical NNs are doomed to restriction to small problem domains.

Finally, classical NNs, including those based on reinforcement learning, cannot easily, if at all, deal with nonstationary environments (Mobus, 2000).

It is certainly true that the complaint against the type of network shown in figure 3, regarding the difficulty of designing such a brain, is valid. It is incredibly difficult to construct a fully functioning nervous system using local representation neurons. However, the problem need not be insoluble, on two counts. The first is that our knowledge of the functional architecture of the brain is growing incredibly fast as compared with just a decade ago (Damasio, 1999). New real-time imaging methodologies such as fMRI promise to fill in the gaps in our understanding of what structures do. Biologically-inspired roboticists are turning more and more to this understanding for guidance in constructing brain-like control systems (cf. Pfeifer & Scheier, 1999; Mobus, 2000).

The second is that even a brain as “simple” as MAVRIC’s demonstrates certain large-scale patterns that may, if elucidated as principles of design, allow a scale up to much more complex brains. From the lessons described below we will describe what we envision as the next generation brain for a new MAVRIC.

3.2 Lesson 2 – Value Systems Should Operate Over Multiple Time Scales

The use of a value system to modulate neural plasticity has been shown to produce very biological-like behavior in artificial agents (Mobus, 1999, 2000; Sporns et al., 2001; see also Pfeifer & Scheier, 1999, pp 469-474). Most work to date has been on value systems that operate over long, but singular time scales (Sporns et al., 2001). MAVRIC’s learning system, based on Adaptrodes, requires a series of value systems to provide the intermediate- and longer-term evaluative feedback for producing longer time scale potentiation of memory traces (Mobus, 2000). This is accomplished by recognizing a cascade of reward/punishment processes that follow an associative learning experience.

In MAVRIC, the sound of the Tone0 (Odor0 in figure 3) represents the “smell” of food, the unconditioned stimulus, which gates the instantaneous trace of any learning synapse, any conditioned stimulus connection, in Seek into the short-term memory level. The combination of TouchAhead and Odor0, however, represents the acquisition of food (eating), which provides a longer-term reward to the Seek neuron (the R input to the Seek neuron in figure 3). This signal fluctuates over very much longer time scales (minutes) as compared with the short-term memory-gating signal (seconds). As has been shown in (Mobus, 1999) and earlier works cited therein, this multi-time domain dynamic is important for a piecewise stationary process to approximate a nonstationary one for anticipatory learning.

As the food value rises over minutes, a “digestion” process converts food into energy, which rises over the next hour(s), a time scale very much longer than that of feeding. The signal arising from increases in energy levels is used to gate the intermediate-term memory traces in Seek learning synapses into long-term memory traces. This is a final confirmation (the C input to Seek in figure 3) of encoding memories of relevant features in the environment that led to the initial feeding act.

Each evaluative feedback system provides an opportunity to marginally increment memory traces based on results. The mere smell of food does not mean the patch of food found will be substantial or sufficient to meet the needs of the agent. Similarly, the food ingested may be diluted with non-nutritious material so that the volume taken in need not result in high energy gain. What counts in the end is the energy gain. At each time scale differential learning takes place relative to the actual outcome. This multi-staged feedback, a multi-time scaled value system, in the context of adaptrode encoding, solves the long-term credit assignment problem arising from scenarios such as just described.
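The cascade of gated traces described in this lesson can be sketched as a four-level memory in which each deeper level is updated only when its evaluative signal is present. This is a simplified stand-in, not the published adaptrode equations: the update rule, the rate constants and the name `adaptrode_step` are assumptions, and the three gates are meant to correspond loosely to the food odor (Odor0), the reward input R and the confirmation input C of the Seek neuron.

```python
def adaptrode_step(w, x, gates,
                   rise=(0.5, 0.1, 0.02, 0.004),
                   decay=(0.2, 0.04, 0.008, 0.0)):
    """One update of a four-level trace w = [w0, w1, w2, w3].

    x     : cue input in [0, 1]
    gates : (us, reward, confirm), each 0 or 1
    """
    new = list(w)
    # Level 0 (instantaneous trace) rises with input, decays toward level 1.
    new[0] += rise[0] * x * (1.0 - w[0]) - decay[0] * (w[0] - w[1])
    # Deeper levels rise toward the level above only while their gate is
    # open, and decay toward the next deeper level (or zero at the bottom).
    for k in range(1, 4):
        floor = w[k + 1] if k < 3 else 0.0
        gain = rise[k] * gates[k - 1] * max(w[k - 1] - w[k], 0.0)
        new[k] += gain - decay[k] * (w[k] - floor)
    return new
```

With all gates closed, a cue raises only the fastest trace, which then fades; with the gates opened in sequence, part of that trace is carried into progressively slower levels, so that only associations confirmed by an eventual energy gain leave a lasting mark.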

3.3 Lesson 3 – A More Biologically-realistic Ethological Model Informs Design

Sending robots out to “find” things and return them to a central location or “feed” is a popular task on which to evaluate learning performance (cf. Arkin, 1998, p. 130; Sporns, et al., 2001). Although inspired by biological examples of foraging such as ant colony food gathering, it turns out that most experimental designs are highly constrained and the search arena is little more than a toy situation. In fact, foraging – as in hunting – is a much more complex task than has been appreciated by roboticists (Mobus, 2000). An increasing number of researchers are turning to neuro-ethological modeling to develop robotic designs, particularly controller designs, with the concept of ecological niches (Arkin, 1998, p. 51). Our only contention with this is that researchers have not gone far enough in this direction. The foraging search task as described in (Mobus, 2000) and briefly above is a stronger ethological model and thus puts stronger requirements on the learning systems used in adaptive robots. MAVRIC’s brain much more strongly reflects these requirements (see Lesson 4 below).

3.4 Lesson 4 – MAVRIC’s Brain Reflects Anatomical Features of Real Brains

MAVRIC’s brain was designed to fulfill an ethological purpose – the foraging search task. There was no a priori attempt to model the wiring diagram after any particular neuroanatomically correct brain. On reflection, however, it is clear that the structure of the artificial brain strongly resembles the structure of a real invertebrate brain with hints at what might be developed into discrete structures in a vertebrate-analogue brain.

Behind the cephalic sensory maps (light, touch and sound/olfactory sensors) lies a region of perceptual processing. Perceptual maps (specifically the touch and light sensory modalities) preserve and enhance the topological features of objects encountered (LightRight, LightAhead, LightLeft, etc.). At the same time, some sensory and/or perceptual information goes right to processing centers that deal with preserving basic biological (-like) functions such as feeding and pain detection.

The associative network provides a convergence zone for salient signals from these centers and direct sensory or perceptual input, allowing the robot to form dispositions regarding the appropriate anticipatory behaviors with respect to those inputs (Damasio, 1994 & 1999). This network maps perception to action. The latter is accomplished through transformations (e.g. seek-left/seek-right or avoid-left/avoid-right) or motor programs and primitive motor commands (go-left, go-right, etc.).

In retrospect, the requirements of the strong ethological model (foraging search) put functional constraints on the design of the network that resulted in brain-like structures. On reflection, this should not be surprising. It is just another perspective on fitness, but what it suggests is that designs of artificial brains might better be informed by closer attention to real brain structures. Evolution has done a wonderful job of producing neuro-ethological fitness. Future designs will incorporate larger-scale structures (functions) from models of real brains.

3.5 Lesson 5 – Partitioning the World: Conceptual Spaces

As it learns, MAVRIC’s brain partitions the world of sensory and perceptual forms into conceptual categories. One category is for things that lead to reward (food) and should, therefore, be sought; the agent can anticipate a reward by doing so. Another is for things that lead to punishment (poison) and should, therefore, be avoided. A third category is for everything else, perceived but unassociated with either reward or punishment.

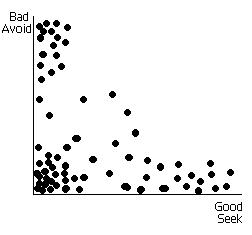

Conceptual spaces have been advanced as a third, intermediate and ameliorating form of representation between connectionist and symbolic forms (Gärdenfors, 2000). Actual representation of concepts is accomplished through vector spaces where quantification of similarity is more readily accomplished. One of the important insights gained in visualizing concepts in this framework is the way in which the world, through perceptual experience, is partitioned into like and dislike objects (concepts). A conceptual space is constructed by combining quality dimensions corresponding to sensory modes or higher-order perceptual modes (ibid). Concepts, corresponding with real things in the world, emerge as vector points or small regions in the space. Figure 4 shows a hypothetical conceptual space based on the MAVRIC Seek/Avoid associative network.

Figure 4. Each point in the space represents the projection of a different perceptual object onto the plane formed from fixed quality dimensions, bad and good. Most objects are essentially neutral and cluster near the origin; some are more or less strongly associated with one dimension or the other. A few are partially associated with both (see text below).

In MAVRIC’s brain, the Seek and Avoid associative neurons work to partition the world into five sets: Good/Bad/Anticipate_good/Anticipate_bad/Neutral. The first two sets are crisp, genetically given and form the basis of the distinction between environmental influences, which either support or threaten the survival of the individual. MAVRIC seeks the good (food) and avoids the bad (poison) automatically or reactively. As we have already shown (Mobus, 1999), MAVRIC learns to associate cue events with either seek or avoid behavior through adaptrode learning. This partitions the set of Neutral objects into anticipatory stimuli, either good or bad, and leaves all unassociated objects in the Neutral set. The anticipatory or cue stimuli sets, as well as the Neutral set, are fuzzy; membership in any of the three remaining sets is graded. A single object can be partially associated with both seek and avoid, though usually more strongly with one than the other. In (Mobus, 2000) and earlier works, it was shown how such fuzzy associations, partitioned across time domains, solve the destructive interference problem.
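The graded partition of learned cues can be illustrated with a small function that turns a pair of association strengths into fuzzy memberships. The mapping below is an assumption made for illustration: the Seek and Avoid strengths (taken to lie in [0, 1]) are used directly as memberships in the two anticipatory sets, and Neutral membership is whatever the stronger association leaves over.

```python
def memberships(seek_w, avoid_w):
    """Graded set membership from Seek/Avoid association strengths."""
    return {
        "Anticipate_good": seek_w,
        "Anticipate_bad": avoid_w,
        # Assumed form: an object is neutral to the extent that it is
        # not strongly associated with either outcome.
        "Neutral": 1.0 - max(seek_w, avoid_w),
    }
```

An unassociated object, `memberships(0.0, 0.0)`, is fully Neutral, while an object with strengths 0.8 and 0.2 belongs mostly to Anticipate_good yet retains partial membership in Anticipate_bad, matching the points in figure 4 that lie off both axes.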

4.0 STRATEGIES FOR A NEXT GENERATION BRAIN

The next MAVRIC platform will include a much larger number of sensory modes and channels, as well as additional behavioral outputs. A front gripper will be used to pick up graspable (see below) objects. We will replace the simplistic light sensors with binocular vision, including a pan-tilt capability. The standard video resolution will be preprocessed to approximate retinal images with lower resolution on the periphery and higher (but still coarse, compared with standard resolution) resolution in the center. Twin semi-directional microphones using DSP-based FFT filters will provide binaural input. A conductivity detection circuit will be used to implement a ‘taste’ system (cf. Sporns, et al., 2001). Thermal sensors will be distributed both internally and externally to obtain heat data (see comments on body state maps below). Our plan is to use portable hair blowers to produce temperature zones in the environment. A digital magnetic compass and inclinometers will be used to supply additional body orientation data. Shaft encoders will provide motor systems feedback. Finally, actual battery charge level will be used to determine MAVRIC’s state of “hunger”.

This highly enriched sensory capacity coupled with a richer, more complex and dynamic environment, will challenge the design of an artificial brain. The general strategy will be to expand on the EBA plan, develop a more complex ethological model, including moving objects, and incorporate neural structures/wiring as explicated from real brains.

4.1 From Lessons 1 & 4 – Combining Local Representation and Neural Maps

Damasio’s description of neural maps, and images formed therein, can be combined with the notion of local representation in abstraction hierarchies. That is, for example, raw sensory data from the periphery can be used to learn local features in a higher-level map, preserving the topological properties of peripheral regions. Single neurons (or small populations of neurons) in this map will represent specific features in the region. These can be combined at a yet higher-level map into local representations of meta-features, again, preserving topological relations. Levels in the hierarchy are determined by the ethological need to identify and abstract objects in the sensory modality (which includes body sense or proprioceptive modalities).

Maintaining local representations in such a hierarchy provides an opportunity to interface the neural architecture with symbolic processing for more abstract reasoning abilities. Representations at the highest levels in a hierarchy have grounded semantics by virtue of being abstractions from the low-level sensory correlations (based on value system-based encoding).

4.2 From Lesson 3 – A Stronger Ethological Model

The current MAVRIC, like a snail, does not have a particular place it calls home. It simply wanders through the environment looking for food. The next MAVRIC will build upon the foraging search task as described above. In addition to simply finding food, the new MAVRIC will also undertake external navigation tasks with a home reference base. That is, MAVRIC will forage and return to a home site when not actively looking for food or other resources, or when frightened by threats. To do this it must be able to learn and represent fixed landmark cues.

NG MAVRIC, when not hungry, will forage for other resources for something like nest building behavior. With its abilities to learn affordances such as graspability and pushability (see below) it will identify objects it may use in constructing a nest and obtain these for that purpose.

A much more complex, but integrated, behavioral repertoire will challenge the effort to design a brain capable of providing the appropriate functionality. However, because this richer model will, by Lesson 3, better inform the choice of functional relationships between neural structures, it will be essential in the exploration of more advanced brains.

4.3 From Lessons 2 & 5 – Partitioning the World of Affordances

The ability to partition the world into complementary fuzzy categories suggests a strategy for developing more advanced brain architectures that support the resolution of concepts during a developmental phase of learning. We begin, as in the case of seeking good and avoiding bad, with partitioning the world into conceptual categories based on fundamental, or basic affordances (Gibson, 1979; Arkin, 1998). Physiologically important affordances have a similar status as that held by homeostatic factors, and need to be learned by juveniles in a wide variety of studied species. In the case of seek and avoid behavior the conditioning factor (value) was pleasure and pain, respectively. It is possible to consider a similar arrangement for acts such as grasping (object is graspable) or pushing (object is pushable), as when a baby learns to grasp or push objects.

Attributes of the object (perceptual cues) arrive earlier to the site of convergence. Later signals from hardwired circuits detecting something in a grasp (or being pushed) provide the gating needed to encode the object as having the particular affordance, at least in short-term memory. Exercising the interaction with an object based on the affordance should lead to a longer-term evaluative feedback, as above in the case of feeding. For example, after grasping an object the subsequent successful movement of the object may lead to an intermediate-term reward signal gating the short-term memory trace into intermediate-term form. One can imagine that for a baby, successful grasping and movement of the object to the mouth, a primitive form of intended action, could lead to a confirming signal over a yet longer time scale. This behavior certainly fits in with the foraging search task as a prelude to the feeding behavior.

As with the Seek/Avoid partition space, one should think of opponent-like affordances. For graspable, one should consider the antithesis, ungraspable; for pushable the antithesis is fixed (or unmoving). Thus an architecture wholly similar to that shown above would act to encode affordance/anti-affordance associations. The system would partition objects (by their attributes or features) into Anticipate_affordance/Anticipate_anti-affordance/Unknown fuzzy sets. An encounter with any object having features similar to previously learned objects will elicit the learned behavior directed toward fulfilling the ecological purpose of the affordance, i.e., the agent will attempt to grasp something that looks vaguely graspable. Conversely, objects that have attributes in the Anticipate_anti-affordance set will fail to elicit grasping behavior.

Objects can become associated with any number of affordances and with the basic good/bad partition. These are in no way mutually exclusive. An object may be graspable and good – as in the case of a piece of food or nesting material.

4.4 Construction of the Proto-Self

The current implementation of MAVRIC is a zombie. MAVRIC is clearly aware of and responds appropriately to external stimuli. But the robot is blissfully unaware of its own physical state, or, more importantly, how external stimuli change that state (Damasio, 1999).

Damasio (1999) has linked the internal stability of an organism with the emergence of a primitive component of consciousness he calls the proto-self. “It is intriguing to think that the constancy of the internal milieu is essential to maintain life and that it might be a blueprint and anchor for what will eventually be a self in the mind” (Damasio, 1999, p. 136, italics in the original). The proto-self originates in a set of body state maps, which includes maps representing reflex actions (withdrawal from pain), maps representing homeostatic (internal milieu) conditions, and maps of the postural (kinesthetic) states. Damasio (1999) goes on to develop a model of core consciousness based on yet higher-level maps, in which representations are formed of the relationship between external objects (the maps suggested in 4.1) and changes in the body state. That is, the agent becomes self-aware. Activation of images (local representations) in this map is used to focus attention, through recurrent projections back to the object and body state maps, on salient relationships as they happen. Additionally, imagined objects can elicit activation of body states similar to those that are caused by the real object.

Damasio’s thesis is that this still somewhat primitive level of consciousness is necessary to connect the agent more fully with the salient elements of its environment. In the language of anticipation (and, by inference, the encoding of causal relations in adaptrode neurons), this means that an agent can encode causal chains that begin either from intention (imagination) or from situational demand (occurrence of an object). In other words, the thought of an imagined object (c.f. Mobus, 1999) can create a chain or sequence of anticipations for the body states and actions necessary to obtain the object.

The NG MAVRIC will include an extensive set of body state maps in an attempt to start a line of research on the proto-self and its role as a basis for core consciousness.

ACKNOWLEDGEMENTS

This work has been supported, in part, by a grant from the National Science Foundation (USA), IIS-9907102, and by a grant from Western Washington University, Office of the Provost.

References

Arkin, R.C. (1998). Behavior-based Robotics. Cambridge, MA, The MIT Press.

Braitenberg, V. (1984). Vehicles: Experiments in Synthetic Psychology, Cambridge MA, The MIT Press.

Damasio, A. (1999). The Feeling of What Happens: Body and Emotion in the Making of Consciousness. New York, Harcourt Brace & Company.

Damasio, A. (1994). Descartes’ Error: Emotion, Reason and the Human Brain. New York, Avon Books.

Gärdenfors, P. (2000). Conceptual Spaces: The Geometry of Thought. Cambridge, MA. The MIT Press.

Gibson, J. J. (1979). The Ecological Approach to Visual Perception. Boston, MA. Houghton Mifflin.

Hinton, G. E., McClelland, J. L. & Rumelhart, D. E. (1989). Distributed Representations. In Rumelhart, D. E. & McClelland, J. L (eds.) Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Volume I: Foundations. Chapter 3, pp. 77-109. Cambridge, MA, The MIT Press.

Hogg, D. W., Martin, F. & Resnick, M. (1991). Braitenberg Creatures, MIT Media Laboratory, URL: http://lcs.www.media.mit.edu/people/fredm/papers/vehicles/

Judd, S. (1988). On the Complexity of Loading Shallow Neural Networks. Journal of Complexity, 4, pp. 177-192, Academic Press.

Minsky, M. (1990). Logical vs. Analogical or Symbolic vs. Connectionist or Neat vs. Scruffy. In Winston, P. (ed.), Artificial Intelligence at MIT, Vol. 1. Cambridge, MA, The MIT Press.

Mobus, G. (2000). Adapting Robot Behavior to Nonstationary Environments: A Deeper Biologically Inspired Model of Neural Processing. Proceedings, SPIE - International Conference: Sensor Fusion and Decentralized Control in Robotic Systems III, Biologically Inspired Robotics, Boston, MA, Nov. 6-8, 2000, pp. 98-112.

Mobus, G. (1999). Foraging Search: Prototypical Intelligence, Third International Conference on Computing Anticipatory Systems, Liege, Belgium, August 9-14, 1999. Daniel Dubois, Editor, Center for Hyperincursion and Anticipation in Ordered Systems, Institute of Mathematics, University of Liege.

Pfeifer, R. & Scheier, C. (1999). Understanding Intelligence, Cambridge MA., The MIT Press.

Sporns, O., Almassy, N., & Edelman, G. (2001). Plasticity in Value Systems and Its Role in Adaptive Behavior. Adaptive Behavior, 8:2, pp. 129-148, Honolulu, HI, International Society for Adaptive Behavior.